02

2024-10

NASA, IBM just open sourced an AI climate model so you can fine-tune your own

Researchers at IBM and NASA this week released an open source AI climate model designed to accurately predict weather patterns while consuming fewer compute resources than traditional physics-based simulations.

Developed as part of a collaboration between IBM and NASA with help from the US Department of Energy's Oak Ridge National Laboratory, the 2.3 billion parameter foundation model called Prithvi WxC was trained on 40 years of observation data from NASA's Modern-Era Retrospective Analysis for Research and Applications, Version 2 (MERRA-2) dataset.

Despite the model's diminutive size, researchers say it was still able to accurately generate global surface temperatures using a random sample that contained just 5 percent of the original data. They also believe the model is particularly well suited to simulating the behavior of hurricanes and atmospheric rivers. However, the model's real advantage may just be its flexibility.

IBM and NASA aren't the only ones experimenting with AI models for weather and climate forecasting. For example, researchers at Google detailed a novel approach to bolstering the accuracy of forecasts by augmenting existing physics models with machine learning. Meanwhile, Nvidia has been hard at work expanding the capabilities of its Earth-2 climate models.

What sets IBM and NASA's efforts apart is that Prithvi WxC is a foundation model, which means it can be adapted to serve any number of use cases ranging from short-term weather forecasting to long-term climate projections.

"This space has seen the emergence of large AI models that focus on a fixed dataset and single use case — primarily forecasting. We have designed our weather and climate foundation model to go beyond such limitations so that it can be tuned to a variety of inputs and uses," Juan Bernabe-Moreno, director of IBM Research Europe, said in a statement this week.

To support the creation of new climate models based on Prithvi WxC, IBM and NASA have released it on Hugging Face alongside a pair of fine-tuned models designed for climate and weather downscaling and gravity wave parameterization.

If you're not familiar with weather downscaling, it refers to taking low-resolution inputs, like temperature, precipitation, or wind speed, from a larger-scale model and using statistical or dynamic probabilities to generate a higher-resolution forecast. Gravity waves, on the other hand, are a phenomenon that affects various atmospheric processes, including cloud formation and even aircraft turbulence.
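To make the idea of downscaling concrete, here is a minimal sketch of the simplest possible baseline: bilinear interpolation of a coarse grid onto a finer one. This is not how the fine-tuned Prithvi WxC model works; a learned downscaler would add detail that interpolation cannot recover. The function name `bilinear_downscale` and the toy temperature grid are illustrative assumptions, not part of any released code.

```python
def bilinear_downscale(coarse, factor):
    """Interpolate a 2-D grid (list of rows) onto a grid `factor` times finer.

    Illustrative baseline only: assumes a regular grid with at least
    2 rows and 2 columns and an integer refinement factor >= 1.
    """
    rows, cols = len(coarse), len(coarse[0])
    out_rows = (rows - 1) * factor + 1
    out_cols = (cols - 1) * factor + 1
    fine = []
    for i in range(out_rows):
        y = i / factor                 # position in coarse-grid coordinates
        y0 = min(int(y), rows - 2)     # upper neighbouring coarse row
        ty = y - y0                    # vertical weight in [0, 1]
        row = []
        for j in range(out_cols):
            x = j / factor
            x0 = min(int(x), cols - 2)  # left neighbouring coarse column
            tx = x - x0                 # horizontal weight in [0, 1]
            top = coarse[y0][x0] * (1 - tx) + coarse[y0][x0 + 1] * tx
            bottom = coarse[y0 + 1][x0] * (1 - tx) + coarse[y0 + 1][x0 + 1] * tx
            row.append(top * (1 - ty) + bottom * ty)
        fine.append(row)
    return fine


# Toy 2x2 "temperature" field (made-up values), refined to 3x3.
coarse_temps = [[10.0, 20.0],
                [30.0, 40.0]]
fine_temps = bilinear_downscale(coarse_temps, 2)
for r in fine_temps:
    print(r)
```

Interpolation only smooths between known points, which is why statistical and machine learning approaches are used when the finer grid needs to capture features such as terrain effects that the coarse model never resolved.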

The goal is that researchers around the globe will be able to take these models and adapt them to their particular needs, whether that be improving warning times for severe weather or improving global climate simulations.

"The NASA foundation model will help us produce a tool that people can use [for] weather, season and climate projections to help inform decisions on how to prepare, respond, and mitigate," Karen St Germain, director of NASA's Earth Science Division, said in a statement.

And because Prithvi WxC is so small, this may not even require that much compute, at least not compared to the large language models that power AI chatbots like Copilot or Gemini. According to the paper, the model was trained from scratch using a relatively small cluster of 64 Nvidia A100s.

In theory, fine-tuning the model should require far, far less than that, making it well within reach for various climate centers, many of which are already upgrading their supercomputing clusters with GPU partitions.
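For a rough sense of scale, the back-of-the-envelope sketch below estimates how much memory the model's weights alone would occupy at common numeric precisions. The only figure taken from the article is the 2.3 billion parameter count; the precisions are assumptions, and the estimate ignores activations, gradients, and optimizer state, all of which add substantially to real training or fine-tuning requirements.

```python
PARAMS = 2.3e9  # parameter count reported for Prithvi WxC

# Bytes per parameter at common precisions (assumed, not from the article).
BYTES_PER_PARAM = {"fp32": 4, "fp16/bf16": 2}


def weight_memory_gb(n_params, bytes_per_param):
    """Memory for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return n_params * bytes_per_param / 1e9


for name, size in BYTES_PER_PARAM.items():
    print(f"{name}: ~{weight_memory_gb(PARAMS, size):.1f} GB of weights")
```

At 16-bit precision that works out to roughly 4.6 GB of weights, which helps explain why a single modern GPU partition is a plausible starting point for experimentation.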

According to IBM, one of the first to put the model to use is the Canadian government, which has begun adapting the model to include additional weather forecasting use cases. In particular, Environment and Climate Change Canada (ECCC), the department heading up the project, is looking to use the model for very short-term precipitation forecasts by feeding real-time radar data into the model. The ECCC is also experimenting with downscaling to generate forecasts down to kilometer scale.

-

29

2025-05

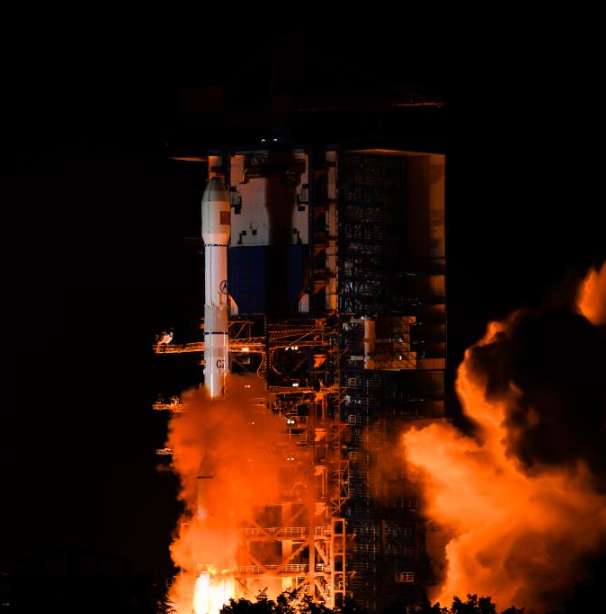

Tianwen-2 Mission Launched Successfully

At 1:31 AM today, China successfully launched the Tianwen-2 planetary exploration probe from the Xichang Satellite Launch Center using the Long March-3B Y110 carrier rocket.

-

13

2025-05

Communication Technology Experiment Satellite No. 19 Successfully Launched

At 2:09 on May 13, China successfully launched the Communication Technology Experiment Satellite No. 19 from the Xichang Satellite Launch Center using a Long March 3B carrier rocket. The satellite smoothly entered its predetermined orbit, and the launch mission was a complete success.

-

12

2025-05

Remote Sensing Satellite No. 40, Group 02, Successfully Launched

On May 11 at 21:27, China successfully launched the Remote Sensing Satellite No. 40, Group 02, from the Taiyuan Satellite Launch Center using a Long March 6A carrier rocket. The satellite entered its predetermined orbit smoothly, and the launch mission was a complete success.